Keywords: [RDN] [RDB]

Zhang Y, Tian Y, Kong Y, et al. Residual dense network for image super-resolution[C]//Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 2018: 2472-2481.

1. Overview

1.1. Motivation

- Most SR models do not make full use of the hierarchical features from the original LR image

- Hierarchical features from different conv layers give more clues for reconstruction

- Interpolating the LR image to HR size before feeding it to the network increases computation and loses details of the original LR image

- A higher growth rate in dense blocks can improve performance, but such networks are hard to train
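To make the computation point concrete, a rough multiply-accumulate (MAC) count for one 3x3 conv layer shows why running the network on the interpolated, HR-sized input costs about s² times more than running it on the LR image. This is a back-of-the-envelope sketch; the helper names and the 48x48, 64-channel sizes are illustrative assumptions, not values from the paper.

```python
def conv3x3_macs(height: int, width: int, c_in: int, c_out: int) -> int:
    """MACs of one 3x3 'same' conv layer over an H x W map (bias ignored)."""
    return height * width * c_in * c_out * 3 * 3


def pre_upsampling_cost_ratio(lr_h: int, lr_w: int, scale: int, channels: int = 64) -> float:
    """Cost of one layer on the interpolated (HR-sized) input vs. on the LR input."""
    lr_cost = conv3x3_macs(lr_h, lr_w, channels, channels)
    hr_cost = conv3x3_macs(lr_h * scale, lr_w * scale, channels, channels)
    return hr_cost / lr_cost


if __name__ == "__main__":
    for s in (2, 3, 4):
        ratio = pre_upsampling_cost_ratio(48, 48, s)
        print(f"x{s}: {ratio:.0f}x more MACs per layer")
```

The ratio depends only on the scale factor, since every spatial position of the HR-sized map is processed by the same kernel.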

This paper proposes the residual dense network (RDN), built from residual dense blocks (RDBs), with the following components:

- contiguous memory mechanism (CM)

- local feature fusion (LFF). makes a high growth rate trainable

- global feature fusion (GFF)

- local residual learning (LRL)

- global residual learning (GRL)

1.2. Related Work

- VDSR, IRCNN. residual learning

- DRCN, DRRN. recursive learning

- MemNet. memory block

- ESPCN. sub-pixel convolution
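The sub-pixel idea from ESPCN upscales by rearranging channels into space rather than by interpolating. Below is a minimal NumPy sketch of that pixel-shuffle rearrangement, assuming a channel-first (C, H, W) layout and the same channel ordering as PyTorch's `nn.PixelShuffle`; the learned conv that produces the C·r² input channels is omitted.

```python
import numpy as np


def pixel_shuffle(x: np.ndarray, r: int) -> np.ndarray:
    """Rearrange an array of shape (C*r*r, H, W) into (C, H*r, W*r)."""
    c_rr, h, w = x.shape
    if c_rr % (r * r) != 0:
        raise ValueError("channel count must be divisible by r*r")
    c = c_rr // (r * r)
    # Split channels into (C, r, r), then interleave each r axis with H and W:
    # out[c, h*r + i, w*r + j] = x[c*r*r + i*r + j, h, w]
    x = x.reshape(c, r, r, h, w)
    x = x.transpose(0, 3, 1, 4, 2)  # -> (C, H, r, W, r)
    return x.reshape(c, h * r, w * r)


if __name__ == "__main__":
    demo = np.arange(4).reshape(4, 1, 1)
    print(pixel_shuffle(demo, 2))  # four channels become one 2x2 patch
```

Because the rearrangement is a fixed permutation, all the learning happens in the preceding conv, which runs entirely at LR resolution.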

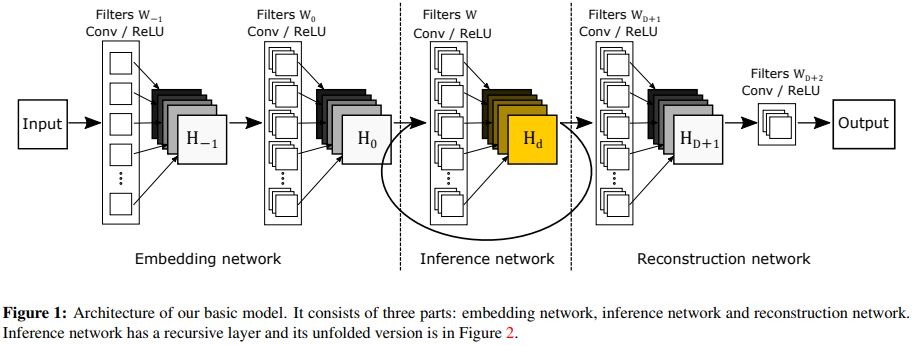

1.3. Architecture

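RDN contains four parts:

- SFENet (shallow feature extraction net)

- RDBs (residual dense blocks)

- DFF (dense feature fusion)

contains GFF (a 1x1 conv to fuse the outputs of all RDBs, then a 3x3 conv for further feature extraction, a design shown effective in another paper) and global residual learning (GRL).

- UPNet (up-sampling net)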

inspired by CVPRW 2017
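To see how the four parts fit together, the sketch below traces the feature-map shape through RDN without computing anything. It assumes the two-conv SFENet (F_-1 kept for GRL, F_0 fed to the first RDB), that every RDB outputs G0 channels, and a single-stage sub-pixel UPNet; the `RDNConfig` defaults are hypothetical illustrative values, not necessarily the paper's final configuration.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class RDNConfig:
    # Hypothetical illustrative defaults (assumptions).
    D: int = 16     # number of RDBs
    C: int = 8      # conv layers per RDB
    G: int = 64     # growth rate
    G0: int = 64    # channels of SFENet / RDB outputs
    in_ch: int = 3  # RGB
    scale: int = 2


def trace_shapes(cfg: RDNConfig, h: int, w: int) -> List[Tuple[str, Tuple[int, int, int]]]:
    """Return (stage, (channels, height, width)) for each stage of RDN."""
    trace = [("input LR", (cfg.in_ch, h, w))]
    # SFENet: two 3x3 convs; F_-1 is reused later for global residual learning.
    trace.append(("SFENet F_-1", (cfg.G0, h, w)))
    trace.append(("SFENet F_0", (cfg.G0, h, w)))
    # Each RDB grows internally to G0 + C*G channels, but LFF maps it back to G0.
    for d in range(1, cfg.D + 1):
        trace.append((f"RDB {d}", (cfg.G0, h, w)))
    # DFF: GFF concatenates all D RDB outputs, 1x1 -> G0, 3x3 -> G0; GRL adds F_-1.
    trace.append(("GFF concat", (cfg.D * cfg.G0, h, w)))
    trace.append(("GFF 1x1 + 3x3 (+ F_-1)", (cfg.G0, h, w)))
    # UPNet (single-stage assumption): conv to G0*s^2 channels, pixel shuffle, conv to RGB.
    s = cfg.scale
    trace.append(("UPNet conv", (cfg.G0 * s * s, h, w)))
    trace.append(("UPNet shuffle", (cfg.G0, h * s, w * s)))
    trace.append(("output HR", (cfg.in_ch, h * s, w * s)))
    return trace


if __name__ == "__main__":
    for stage, shape in trace_shapes(RDNConfig(scale=3), 32, 32):
        print(f"{stage:24s} {shape}")
```

Everything before UPNet stays at LR resolution, which is the point of not interpolating the input first.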

1.4. RDB

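- CM

passes the state of the preceding RDB to each layer of the current RDB.

- LFF

adaptively fuses the preceding RDB's state and the outputs of all conv layers in the current RDB with a 1x1 conv, reducing the channel count back to the block's input width. The authors find that as the growth rate G grows, a very deep dense network without LFF is hard to train.

- LRL

adds the RDB input to the LFF output, further improving information flow.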
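The three RDB mechanisms can be sketched as a plain NumPy forward pass: every conv layer sees the concatenation of the block input and all earlier layer outputs (CM plus dense connections), a 1x1 conv fuses everything back to G0 channels (LFF), and the block input is added back (LRL). This is a minimal sketch, not the authors' code; `conv2d`, `rdb_forward`, and `init_rdb` are hypothetical helpers, and the random init exists only for shape checking.

```python
import numpy as np


def conv2d(x, weight, bias):
    """'Same' 2D conv (cross-correlation) for x of shape (C_in, H, W).

    weight: (C_out, C_in, k, k) with odd k; bias: (C_out,).
    """
    c_out, _, k, _ = weight.shape
    pad = k // 2
    _, h, w = x.shape
    xp = np.pad(x, ((0, 0), (pad, pad), (pad, pad)))  # zero padding
    out = np.zeros((c_out, h, w))
    for i in range(k):
        for j in range(k):
            # Contract over input channels for this kernel tap.
            out += np.einsum("oc,chw->ohw", weight[:, :, i, j], xp[:, i:i + h, j:j + w])
    return out + bias[:, None, None]


def rdb_forward(x, conv_params, lff_params):
    """One residual dense block.

    x: (G0, H, W) output of the preceding RDB (its 'state').
    conv_params: C pairs (w, b); layer i takes G0 + i*G channels and outputs G.
    lff_params: (w, b) of the 1x1 LFF conv mapping G0 + C*G -> G0 channels.
    """
    features = [x]  # CM: the preceding state is visible to every layer
    for w, b in conv_params:
        inp = np.concatenate(features, axis=0)
        features.append(np.maximum(conv2d(inp, w, b), 0.0))  # 3x3 conv + ReLU
    fused = conv2d(np.concatenate(features, axis=0), *lff_params)  # LFF
    return x + fused  # LRL


def init_rdb(g0, g, c, rng):
    """Hypothetical random init, only for shape checking."""
    conv_params = [
        (rng.normal(0.0, 0.01, (g, g0 + i * g, 3, 3)), np.zeros(g)) for i in range(c)
    ]
    lff_params = (rng.normal(0.0, 0.01, (g0, g0 + c * g, 1, 1)), np.zeros(g0))
    return conv_params, lff_params


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    x = rng.normal(size=(8, 5, 5))  # G0 = 8
    y = rdb_forward(x, *init_rdb(8, 4, 3, rng))  # G = 4, C = 3
    print(y.shape)
```

Without LFF, the concatenated width G0 + C·G would be passed on and keep growing from block to block; the 1x1 fusion keeps each block's output at G0 so blocks can be stacked.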

1.5. Details

- remove BN (performance and memory)

- remove pooling

- L1 loss

- self-ensemble method
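The self-ensemble method averages the model's predictions over the 8 flip/rotation variants of the input, undoing each transform on the output before averaging. A minimal NumPy sketch, assuming `model` is any callable that maps an (H, W) or (H, W, C) array to its super-resolved output; it illustrates the technique and is not the authors' implementation.

```python
import numpy as np


def self_ensemble(model, lr):
    """Geometric self-ensemble (x8): 4 rotations, each with and without a flip.

    Rotations and flips act on the first two (spatial) axes.
    """
    outputs = []
    for flip in (False, True):
        for k in range(4):
            t = np.rot90(lr, k, axes=(0, 1))
            if flip:
                t = np.flip(t, axis=1)
            out = model(t)
            # Invert in reverse order: flip back first, then rotate back.
            if flip:
                out = np.flip(out, axis=1)
            outputs.append(np.rot90(out, -k, axes=(0, 1)))
    return np.mean(outputs, axis=0)


if __name__ == "__main__":
    # Hypothetical stand-in "model": nearest-neighbour x2 upscaling.
    upscale = lambda a: a.repeat(2, axis=0).repeat(2, axis=1)
    lr = np.arange(15, dtype=float).reshape(3, 5)
    print(self_ensemble(upscale, lr).shape)
```

For a model that already commutes with flips and rotations (like the nearest-neighbour stand-in) the ensemble changes nothing; a learned SR network is not exactly equivariant, which is why averaging the 8 outputs can help. The cost is 8 forward passes per image.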

1.6. Dataset

- DIV2K (800 train, 100 valid, 100 test)

- 5 benchmark datasets for testing (Set5, Set14, B100, Urban100, Manga109)

1.7. Degradation Model

- BI: bicubic downsampling

- BD: blur with a Gaussian kernel, then downsample

- DN: bicubic downsampling, then add Gaussian noise
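The BD and DN degradations can be sketched in NumPy for a grayscale image in [0, 255]. Assumptions, all labeled: the 7x7 kernel with sigma 1.6, scale 3, and noise level 30 are the settings I recall being used for these models, not values stated in these notes; "downsample" in BD is taken to mean keeping every s-th pixel; and the bicubic step of BI/DN is not reimplemented here (a library bicubic resize, commonly MATLAB's `imresize`, would be used).

```python
import numpy as np


def gaussian_kernel(size: int = 7, sigma: float = 1.6) -> np.ndarray:
    """Normalized 2D isotropic Gaussian kernel (assumed 7x7, sigma 1.6)."""
    ax = np.arange(size) - (size - 1) / 2
    g = np.exp(-(ax ** 2) / (2 * sigma ** 2))
    k = np.outer(g, g)
    return k / k.sum()


def blur(img: np.ndarray, kernel: np.ndarray) -> np.ndarray:
    """Filter a 2D image with reflect padding (kernel is symmetric, so no flip needed)."""
    k = kernel.shape[0]
    pad = k // 2
    padded = np.pad(img.astype(float), pad, mode="reflect")
    h, w = img.shape
    out = np.zeros((h, w))
    for i in range(k):
        for j in range(k):
            out += kernel[i, j] * padded[i:i + h, j:j + w]
    return out


def degrade_bd(hr: np.ndarray, scale: int = 3, size: int = 7, sigma: float = 1.6) -> np.ndarray:
    """BD: Gaussian blur, then decimation (assumed: keep every `scale`-th pixel)."""
    return blur(hr, gaussian_kernel(size, sigma))[::scale, ::scale]


def add_gaussian_noise(img: np.ndarray, level: float = 30.0, rng=None) -> np.ndarray:
    """Noise step of DN: additive Gaussian noise with std `level` (on a 0-255 scale)."""
    rng = np.random.default_rng() if rng is None else rng
    return img + rng.normal(0.0, level, img.shape)


if __name__ == "__main__":
    hr = np.random.default_rng(0).uniform(0, 255, size=(48, 48))
    print(degrade_bd(hr).shape)
```

Training a separate model per degradation is what lets the comparison show how RDN handles blur and noise, not only bicubic downsampling.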

2. Experiments

2.1. Study of D, C, G

- D. number of RDBs

- C. number of conv layers per RDB

- G. growth rate

Larger D, C, and G each improve performance, at the cost of more parameters and computation.
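The cost side of that trade-off can be counted directly. The sketch below tallies weights and biases of the RDBs plus the GFF convs as a function of D, C, and G, assuming G0 = 64, 3x3 convs inside each RDB, a 1x1 LFF conv, and GFF as a 1x1 (D·G0 to G0) followed by a 3x3 (G0 to G0); SFENet and UPNet are left out. The configurations in the loop are illustrative, not the paper's experimental grid.

```python
def rdb_params(g0: int, g: int, c: int) -> int:
    """Weights + biases of one RDB: C dense 3x3 convs plus a 1x1 LFF conv."""
    # Conv layer i sees G0 + i*G input channels and produces G channels.
    convs = sum(9 * (g0 + i * g) * g + g for i in range(c))
    lff = (g0 + c * g) * g0 + g0
    return convs + lff


def rdbs_and_gff_params(d: int, c: int, g: int, g0: int = 64) -> int:
    """D RDBs plus GFF (1x1: D*G0 -> G0, then 3x3: G0 -> G0)."""
    gff = (d * g0) * g0 + g0 + 9 * g0 * g0 + g0
    return d * rdb_params(g0, g, c) + gff


if __name__ == "__main__":
    for d, c, g in [(10, 6, 32), (20, 6, 32), (20, 3, 32), (20, 6, 16)]:
        n = rdbs_and_gff_params(d, c, g)
        print(f"D={d:2d} C={c} G={g:2d}: {n / 1e6:.2f}M params")
```

Parameters grow linearly in D but roughly quadratically in C (each extra layer sees all earlier outputs) and in G for the larger configurations, so C and G are the more expensive knobs per unit of depth.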

2.2. Ablation Study

2.3. Comparison

When the scaling factor becomes larger, RDN no longer outperforms MDSR; likely reasons are MDSR's greater depth, multi-scale training, and larger input patch size.

Methods that take the interpolated LR image as input tend to produce artifacts and blur.