Keyword [Semi-Automatic] [Polygon-RNN]

Castrejon L, Kundu K, Urtasun R, et al. Annotating object instances with a polygon-rnn[C]//Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 2017: 5230-5238.

1. Overview

1.1. Motivation

- Most current methods treat object segmentation as a pixel-labeling problem

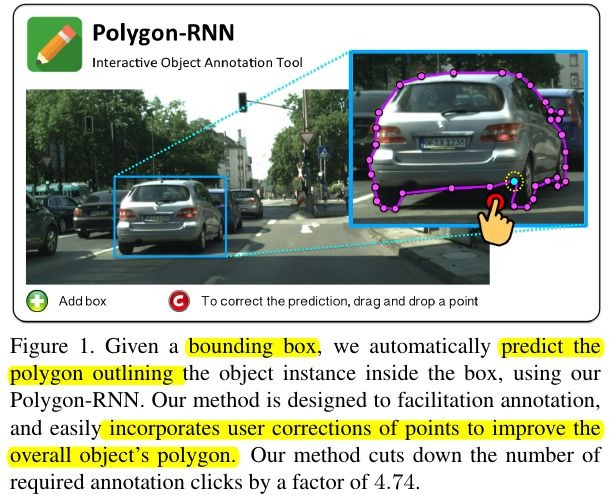

This paper instead casts segmentation as polygon prediction:

- Proposes the Polygon-RNN architecture for semi-automatic annotation

- Speeds up annotation by a factor of 4.7 on the Cityscapes dataset

1.2. Polygon-RNN

1.2.1. Input

- Image crop around the object (its bounding box)

- Sequence of previously predicted vertices

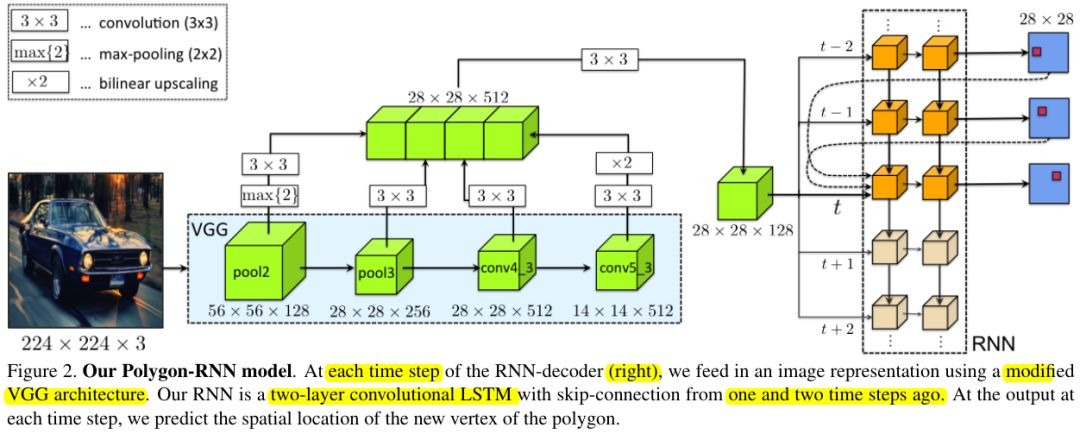
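A minimal sketch of how the vertex part of the input could be encoded at step t, assuming vertices are quantized onto a D×D grid and fed as one-hot maps of the two previous vertices (t-1, t-2). The grid size 28 and the helper names `vertex_to_onehot` and `step_inputs` are illustrative assumptions, not taken from these notes.

```python
import numpy as np
from typing import List, Tuple

Vertex = Tuple[int, int]  # (x, y) cell on the quantized grid


def vertex_to_onehot(v: Vertex, grid: int) -> np.ndarray:
    """One-hot D x D map with a single 1 at the vertex cell."""
    m = np.zeros((grid, grid), dtype=np.float32)
    m[v[1], v[0]] = 1.0  # (x, y) -> row y, column x
    return m


def step_inputs(history: List[Vertex], grid: int = 28) -> np.ndarray:
    """Stack one-hot maps of v_{t-1} and v_{t-2}; use all-zero maps when missing."""
    empty = np.zeros((grid, grid), dtype=np.float32)
    prev1 = vertex_to_onehot(history[-1], grid) if len(history) >= 1 else empty
    prev2 = vertex_to_onehot(history[-2], grid) if len(history) >= 2 else empty
    return np.stack([prev1, prev2])  # shape (2, grid, grid)


x = step_inputs([(3, 5)])
print(x.shape)  # (2, 28, 28)
```

These maps would be concatenated with the CNN image features before entering the RNN.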

1.2.2. Feature Extractor

- Modified VGG

- Low-level features capture boundary information (edges and corners)

- High-level features capture semantic information (what the object is)

- Bilinear upsampling or max-pooling brings the multi-scale feature maps to a common resolution before concatenation
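The skip-connection fusion can be sketched in NumPy: larger maps are max-pooled down, smaller maps are bilinearly upsampled, and everything is concatenated along channels. The 112/56/28/14 spatial sizes, the channel counts, and the 28×28 target are assumptions for illustration only.

```python
import numpy as np
from typing import List


def max_pool(x: np.ndarray, k: int) -> np.ndarray:
    """k x k max-pooling with stride k on a (C, H, W) map (H, W divisible by k)."""
    c, h, w = x.shape
    return x.reshape(c, h // k, k, w // k, k).max(axis=(2, 4))


def bilinear_resize(x: np.ndarray, out_h: int, out_w: int) -> np.ndarray:
    """Bilinear resize of a (C, H, W) map with half-pixel sampling."""
    c, h, w = x.shape
    ys = np.clip((np.arange(out_h) + 0.5) * h / out_h - 0.5, 0, h - 1)
    xs = np.clip((np.arange(out_w) + 0.5) * w / out_w - 0.5, 0, w - 1)
    y0 = np.floor(ys).astype(int)
    x0 = np.floor(xs).astype(int)
    y1 = np.minimum(y0 + 1, h - 1)
    x1 = np.minimum(x0 + 1, w - 1)
    wy = (ys - y0)[None, :, None]  # vertical interpolation weights
    wx = (xs - x0)[None, None, :]  # horizontal interpolation weights
    top = x[:, y0][:, :, x0] * (1 - wx) + x[:, y0][:, :, x1] * wx
    bot = x[:, y1][:, :, x0] * (1 - wx) + x[:, y1][:, :, x1] * wx
    return top * (1 - wy) + bot * wy


def fuse(features: List[np.ndarray], size: int = 28) -> np.ndarray:
    """Resize every (C, H, H) map to (C, size, size) and concatenate on channels."""
    resized = []
    for f in features:
        h = f.shape[1]
        if h > size:
            resized.append(max_pool(f, h // size))  # high-res, low-level: pool down
        elif h < size:
            resized.append(bilinear_resize(f, size, size))  # low-res, semantic: upsample
        else:
            resized.append(f)
    return np.concatenate(resized, axis=0)


feats = [np.ones((4, 112, 112)), np.ones((8, 56, 56)), np.ones((16, 28, 28)), np.ones((32, 14, 14))]
print(fuse(feats).shape)  # (60, 28, 28)
```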

1.2.3. RNN

- Convolutional LSTM (ConvLSTM): preserves spatial information and needs far fewer parameters than a fully connected RNN
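To make the parameter claim concrete, the counts below compare an LSTM whose four gates are k×k convolutions with one whose gates are fully connected over the flattened map. The sizes (128 input channels, 16 hidden channels, 3×3 kernels, a 28×28 map) are illustrative assumptions, not values from these notes.

```python
def conv_lstm_params(c_in: int, c_hidden: int, k: int) -> int:
    """Four gates, each a k x k convolution over [x_t, h_{t-1}] plus a bias per channel."""
    return 4 * (k * k * (c_in + c_hidden) * c_hidden + c_hidden)


def fc_lstm_params(c_in: int, c_hidden: int, h: int, w: int) -> int:
    """Four gates, each a dense layer over the flattened [x_t, h_{t-1}] plus a bias."""
    n_in = h * w * c_in
    n_hidden = h * w * c_hidden
    return 4 * ((n_in + n_hidden) * n_hidden + n_hidden)


print(conv_lstm_params(128, 16, 3))     # 83008
print(fc_lstm_params(128, 16, 28, 28))  # 5664719872
```

The convolutional version's count does not depend on the map size at all, because the same kernel is shared across every spatial position; that weight sharing is also what keeps the hidden state laid out as a spatial grid.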

1.3. Related Work

1.3.1. Semi-automatic Annotation

- GrabCut: exploits user annotations (e.g. a box or scribbles)

- GrabCut + CNN

Most define a graphical model at the pixel level, which makes it hard to incorporate shape priors.

1.3.2. Annotation Tool

1.3.3. Instance Segmentation

- Pixel-level labeling within an explicit box or patch

- Methods that produce polygons

1.4. Training Detail

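- Cross-entropy loss at each time step of the RNN

- During training, the ground-truth vertices at steps t-1 and t-2 are fed as input when predicting step t

- For the first vertex, a separate CNN is trained with a multi-task loss

- Inference takes about 250 ms per image

- Simulated annotator: set a chessboard-distance threshold T; if a predicted vertex is farther than T from the ground truth, it is corrected, simulating a human click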
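The simulated-annotator loop can be sketched as follows. The model is replaced by a hypothetical callable `predict_next`, and the t-th prediction is assumed to be compared with the t-th ground-truth vertex; both are simplifying assumptions for illustration, not details confirmed by these notes.

```python
from typing import Callable, List, Sequence, Tuple

Vertex = Tuple[int, int]


def chessboard_distance(a: Vertex, b: Vertex) -> int:
    """Chebyshev (chessboard) distance between two grid cells."""
    return max(abs(a[0] - b[0]), abs(a[1] - b[1]))


def simulate_annotation(
    predict_next: Callable[[List[Vertex]], Vertex],  # hypothetical model wrapper
    gt_vertices: Sequence[Vertex],
    threshold: int,
) -> Tuple[List[Vertex], int]:
    """Return the corrected polygon and the number of simulated clicks."""
    polygon: List[Vertex] = []
    clicks = 0
    for gt in gt_vertices:
        pred = predict_next(polygon)
        if chessboard_distance(pred, gt) > threshold:
            pred = gt  # simulated click: snap the vertex to the ground truth
            clicks += 1
        polygon.append(pred)  # corrected vertex is fed back for the next step
    return polygon, clicks


gt = [(0, 0), (0, 10), (10, 10), (10, 0)]
offsets = [(1, 0), (0, 3), (2, 2), (0, 1)]  # toy prediction errors
noisy = lambda hist: (gt[len(hist)][0] + offsets[len(hist)][0], gt[len(hist)][1] + offsets[len(hist)][1])
print(simulate_annotation(noisy, gt, threshold=1))
# ([(1, 0), (0, 10), (10, 10), (10, 1)], 2)
```

Lowering T forces more corrections, which trades more clicks for a higher IoU.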

1.5. Dataset

- Cityscapes

- KITTI

1.6. Data Process

- Perform polygon simplification with zero error on the quantized grid: eliminate vertices that lie on a straight line between their neighbors or fall into the same grid cell as the previous vertex
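One possible reading of "zero-error" simplification, sketched below: quantize the vertices, drop consecutive vertices that land in the same cell, then drop vertices that lie strictly between their neighbors on a straight line (removing them leaves the quantized polygon unchanged). The helper names and the box/grid sizes are assumptions for illustration.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]
Cell = Tuple[int, int]


def quantize(polygon: Sequence[Point], box_w: float, box_h: float, grid: int) -> List[Cell]:
    """Map box-relative coordinates to cells of a grid x grid lattice."""
    return [
        (min(int(x * grid / box_w), grid - 1), min(int(y * grid / box_h), grid - 1))
        for x, y in polygon
    ]


def simplify(cells: Sequence[Cell]) -> List[Cell]:
    # 1) Remove vertices falling into the same cell as their predecessor (cyclically).
    pts: List[Cell] = []
    for c in cells:
        if not pts or c != pts[-1]:
            pts.append(c)
    while len(pts) > 1 and pts[0] == pts[-1]:
        pts.pop()
    # 2) Remove vertices lying strictly between their neighbors on one line.
    changed = True
    while changed and len(pts) > 3:
        changed = False
        n = len(pts)
        for i in range(n):
            p, c, q = pts[i - 1], pts[i], pts[(i + 1) % n]
            cross = (c[0] - p[0]) * (q[1] - c[1]) - (c[1] - p[1]) * (q[0] - c[0])
            dot = (c[0] - p[0]) * (q[0] - c[0]) + (c[1] - p[1]) * (q[1] - c[1])
            if cross == 0 and dot > 0:  # collinear and not a back-tracking spike
                pts = pts[:i] + pts[i + 1:]
                changed = True
                break
    return pts


raw = [(0, 0), (0.5, 0.2), (5, 0), (9, 0), (9, 9), (0, 9)]
print(simplify(quantize(raw, 10, 10, 10)))  # [(0, 0), (9, 0), (9, 9), (0, 9)]
```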

1.7. Data Augmentation

- Random flipping

- Randomly enlarge the bounding box by 10% to 20%

- Randomly select the starting vertex

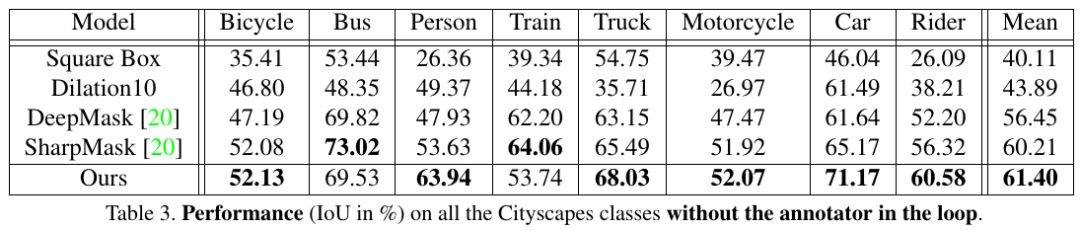
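The three augmentations can be sketched on a polygon plus its box. Assumptions, not details from these notes: the flip is horizontal, "enlarge by s" means the total width and height each grow by a factor s around the center, and the flipped vertex list is reversed so the polygon keeps its original orientation.

```python
import random
from typing import List, Tuple

Point = Tuple[float, float]
Box = Tuple[float, float, float, float]  # (x0, y0, x1, y1)


def enlarge_box(box: Box, rng: random.Random, lo: float = 0.10, hi: float = 0.20) -> Box:
    """Grow the box symmetrically by a random fraction in [lo, hi]."""
    x0, y0, x1, y1 = box
    s = rng.uniform(lo, hi)
    dw, dh = (x1 - x0) * s / 2, (y1 - y0) * s / 2
    return (x0 - dw, y0 - dh, x1 + dw, y1 + dh)


def hflip(polygon: List[Point], box: Box) -> List[Point]:
    """Mirror x inside the box; reverse order because mirroring flips orientation."""
    x0, _, x1, _ = box
    return [(x0 + x1 - x, y) for x, y in polygon][::-1]


def random_start(polygon: List[Point], rng: random.Random) -> List[Point]:
    """Cyclically rotate the vertex list so a random vertex comes first."""
    k = rng.randrange(len(polygon))
    return polygon[k:] + polygon[:k]


def augment(polygon: List[Point], box: Box, rng: random.Random) -> Tuple[List[Point], Box]:
    box = enlarge_box(box, rng)
    if rng.random() < 0.5:
        polygon = hflip(polygon, box)
    return random_start(polygon, rng), box


rng = random.Random(0)
print(augment([(1.0, 1.0), (5.0, 1.0), (5.0, 4.0)], (0.0, 0.0, 6.0, 5.0), rng))
```

Randomizing the start vertex matters because the RNN otherwise learns a fixed starting convention that the annotator may not follow.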

2. Experiments

2.1. Metrics

- IoU

- Number of clicks (corrections made by the simulated annotator)

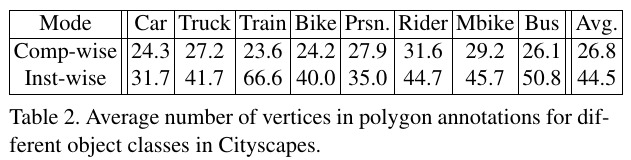
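A minimal sketch of the IoU metric between a predicted and a ground-truth polygon, assuming both are rasterized to binary masks by testing pixel centers with the even-odd rule. The pure-Python rasterizer and the helper names are assumptions for illustration; the notes do not say how the masks are produced.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]
Mask = List[List[bool]]


def point_in_polygon(x: float, y: float, poly: Sequence[Point]) -> bool:
    """Even-odd rule: count polygon edges crossed by a ray going in +x."""
    inside = False
    n = len(poly)
    for i in range(n):
        x1, y1 = poly[i]
        x2, y2 = poly[(i + 1) % n]
        if (y1 > y) != (y2 > y):
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


def rasterize(poly: Sequence[Point], width: int, height: int) -> Mask:
    """Mark a pixel as foreground if its center lies inside the polygon."""
    return [[point_in_polygon(c + 0.5, r + 0.5, poly) for c in range(width)] for r in range(height)]


def mask_iou(a: Mask, b: Mask) -> float:
    inter = union = 0
    for ra, rb in zip(a, b):
        for pa, pb in zip(ra, rb):
            inter += pa and pb
            union += pa or pb
    return inter / union if union else 1.0


pred = rasterize([(0, 0), (4, 0), (4, 4), (0, 4)], 8, 8)
gt = rasterize([(2, 0), (6, 0), (6, 4), (2, 4)], 8, 8)
print(mask_iou(pred, gt))  # 0.333... (8 shared pixels / 24 in the union)
```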

2.2. Step Limitation

- Set the maximum number of decoding steps (vertices) to 70

- Instance-wise: treat the entire instance as one example

- Component-wise: treat each component of an instance as a separate example
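The step limit can be sketched as a capped decoding loop. The hypothetical `step_fn` stands in for one RNN step and is assumed to return `None` when the model signals the end of the polygon; with component-wise evaluation, each component would be decoded by a separate call.

```python
from typing import Callable, List, Optional, Tuple

Vertex = Tuple[int, int]

MAX_STEPS = 70  # maximum number of decoding steps from the notes


def decode_polygon(
    step_fn: Callable[[List[Vertex]], Optional[Vertex]],  # hypothetical RNN step
    max_steps: int = MAX_STEPS,
) -> List[Vertex]:
    """Predict vertices until an end-of-polygon signal or the step limit."""
    polygon: List[Vertex] = []
    for _ in range(max_steps):
        v = step_fn(polygon)
        if v is None:  # assumed end-of-polygon signal
            break
        polygon.append(v)
    return polygon


tri = [(0, 0), (5, 0), (0, 5)]
print(decode_polygon(lambda h: tri[len(h)] if len(h) < 3 else None))  # [(0, 0), (5, 0), (0, 5)]
```

A model that never emits the end signal is simply truncated at 70 vertices, so objects whose simplified polygons need more vertices cannot be represented exactly.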

2.3. Result