Qi C R, Yi L, Su H, et al. Pointnet++: Deep hierarchical feature learning on point sets in a metric space[C]//Advances in Neural Information Processing Systems. 2017: 5105-5114.

1. Overview

- PointNet aggregates all individual point features into a single global point cloud signature, so it does not capture local structures

- Point sets are usually sampled with varying densities

This paper proposes PointNet++:

- Hierarchical. learn local features with increasing contextual scales

- Adaptively combine features from multiple scales

1.1. Two Issues

- How to generate the partitioning of the point set (neighbourhood balls around centroids chosen by farthest point sampling, FPS)

A small neighbourhood may contain too few points because of sampling deficiency, which may be insufficient for PointNet to capture patterns robustly.

- How to abstract sets of points or local features through the local feature learner (PointNet, applied recursively)

1.2. Related Work

- Hierarchical Feature Learning

- Deep Learning of Unordered Sets

- Point Sampling

- 3D representation. (volumetric grids and geometric graphs)

1.3. Datasets

- MNIST (2D objects)

- ModelNet40 (3D)

- SHREC15 (3D)

- ScanNet (real 3D scenes)

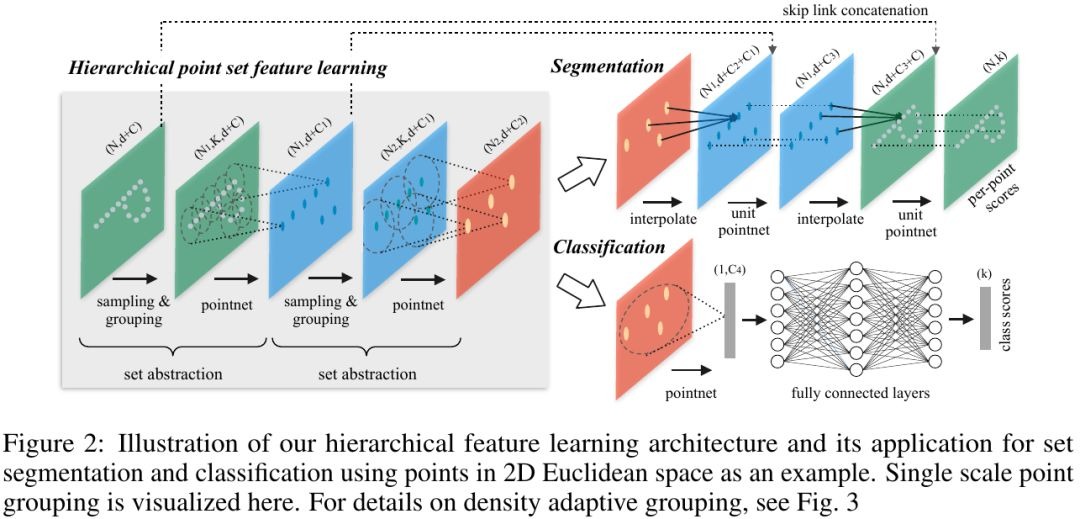

2. Network

2.1. Sampling Layer

Use farthest point sampling (FPS) to get the coordinates of the N’ centroids.

- Input: [N x d], the coordinates of point set

- Output: [N’ x d], the coordinates of centroid points
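The sampling step can be sketched in NumPy as follows. This is a minimal illustration, not the authors' implementation: the function name `farthest_point_sampling` and the `start_index` parameter are assumptions (implementations often pick the first point at random), and it assumes `n_samples` is at most N.

```python
import numpy as np

def farthest_point_sampling(points, n_samples, start_index=0):
    """Greedily pick indices so each new point is farthest from those already chosen.

    points: (N, d) array of coordinates.
    Returns an (n_samples,) integer array of indices into `points`.
    """
    n_points = points.shape[0]
    selected = np.empty(n_samples, dtype=np.int64)
    # Squared distance from every point to its nearest already-selected centroid.
    min_sq_dist = np.full(n_points, np.inf)
    current = start_index  # assumed starting point; hypothetical parameter
    for i in range(n_samples):
        selected[i] = current
        sq_dist = np.sum((points - points[current]) ** 2, axis=1)
        min_sq_dist = np.minimum(min_sq_dist, sq_dist)
        # The next centroid is the point farthest from the selected set.
        current = int(np.argmax(min_sq_dist))
    return selected

points = np.array([[0.0, 0.0], [1.0, 0.0], [0.0, 1.0], [10.0, 10.0], [0.5, 0.5]])
centroid_idx = farthest_point_sampling(points, 3)
centroids = points[centroid_idx]  # shape (3, 2), i.e. [N' x d]
```

Returning indices rather than coordinates keeps the centroids tied to their rows in the original point set, which the grouping step needs.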

2.2. Grouping Layer

Use ball query (or KNN) to group the point set around each centroid.

- Input: [N’ x d], centroid point set; [N x (d+C)], point set

- Output: [N’ x K x (d+C)], grouped point set

- Ball query guarantees a fixed region scale; KNN does not

- The coordinates of points in a local region are first translated into a local frame relative to the centroid point (to capture point-to-point relations in the local region)
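A rough NumPy sketch of ball-query grouping with the local-frame translation is given below. The function name `ball_query_group`, the `radius` and `k` values, and the padding rule (repeat the first neighbour when fewer than K points fall in the ball) are assumptions made for illustration. It also assumes the centroids are drawn from the point set, so every ball contains at least its own centroid.

```python
import numpy as np

def ball_query_group(points, features, centroid_idx, radius, k):
    """Group up to k neighbours within `radius` of each centroid.

    points: (N, d) coordinates; features: (N, C) per-point features.
    Returns (N', k, d + C), with coordinates expressed relative to each centroid.
    """
    centroids = points[centroid_idx]                     # (N', d)
    diff = points[None, :, :] - centroids[:, None, :]    # (N', N, d)
    sq_dist = np.sum(diff ** 2, axis=-1)                 # (N', N)
    groups = []
    for j in range(centroids.shape[0]):
        in_ball = np.flatnonzero(sq_dist[j] <= radius ** 2)[:k]
        # Assumed convention: pad with the first neighbour so each group has k rows.
        pad = np.full(k - in_ball.size, in_ball[0])
        idx = np.concatenate([in_ball, pad])
        local_xyz = points[idx] - centroids[j]           # translate into the local frame
        groups.append(np.concatenate([local_xyz, features[idx]], axis=1))
    return np.stack(groups)

points = np.array([[0.0, 0.0], [1.0, 0.0], [0.0, 1.0], [10.0, 10.0], [0.5, 0.5]])
features = np.arange(5, dtype=float).reshape(5, 1)       # C = 1 toy feature
grouped = ball_query_group(points, features, np.array([0, 3]), radius=1.5, k=3)
```

Here the isolated point at (10, 10) has no neighbours inside the ball, so its group is the centroid repeated three times.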

2.3. PointNet Layer

- Input: [N’ x K x (d+C)]

- Output: [N’ x (d+C’)]
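The shape change above can be illustrated with a shared MLP followed by max pooling over the K points of each group. This is only a sketch: the layer sizes and random weights are hypothetical, and details a real network would include (such as batch normalization and training) are left out.

```python
import numpy as np

def pointnet_layer(grouped, centroids, weights, biases):
    """Encode each local region into one feature vector.

    grouped: (N', K, d + C) grouped points; centroids: (N', d).
    weights, biases: per-layer parameters of the shared MLP (hypothetical sizes).
    Returns (N', d + C'): centroid coordinates followed by the region feature.
    """
    h = grouped
    for w, b in zip(weights, biases):
        # The same linear layer + ReLU is applied to every point in every group.
        h = np.maximum(h @ w + b, 0.0)
    pooled = h.max(axis=1)  # symmetric pooling: the order of the K points is irrelevant
    return np.concatenate([centroids, pooled], axis=1)

rng = np.random.default_rng(0)
grouped = rng.normal(size=(4, 8, 5))       # N' = 4, K = 8, d + C = 3 + 2
centroids = rng.normal(size=(4, 3))
weights = [rng.normal(size=(5, 16)), rng.normal(size=(16, 32))]
biases = [np.zeros(16), np.zeros(32)]
out = pointnet_layer(grouped, centroids, weights, biases)   # shape (4, 3 + 32)
```

Max pooling is what makes the layer insensitive to the order of points within a group.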

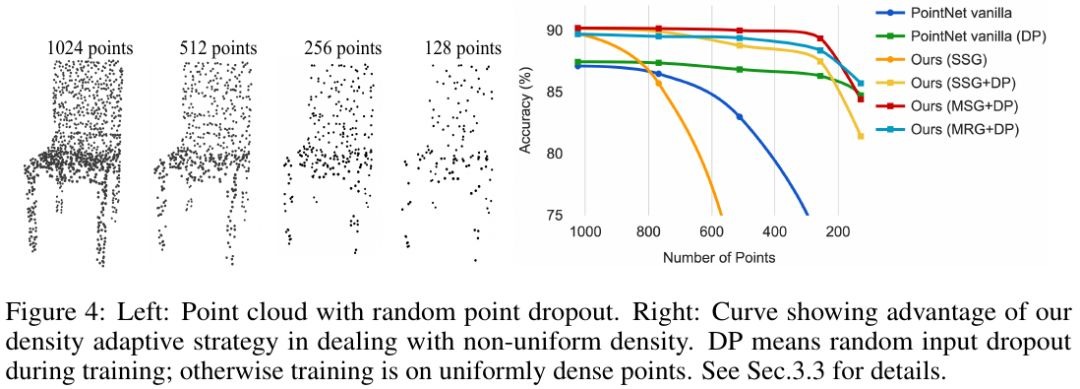

2.4. Robust under Non-Uniform Sampling Density

Features learned on dense data may not generalize to sparsely sampled regions. Conversely, models trained on sparse point clouds may not recognize fine-grained local structures.

2.4.1. Sample Method (random input dropout)

[during training]

- for each training point set, draw a dropout rate Θ uniformly from [0, p], where p ≤ 1

- drop each point independently with probability Θ

In practice, set p = 0.95 to avoid an empty point set.

- varying sparsity (induced by Θ)

- varying uniformity (induced by the randomness of the dropout)

[during testing]

- keep all available points.
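The training-time procedure can be sketched as below. The function name `random_input_dropout` and the `rng` argument are assumptions for illustration. Some implementations overwrite dropped points with a duplicate of a kept point to preserve a fixed array shape, which this sketch does not do.

```python
import numpy as np

def random_input_dropout(points, p=0.95, rng=None):
    """Randomly drop points from one training point set.

    points: (N, d) array. Returns the kept rows, at most N of them.
    """
    rng = np.random.default_rng() if rng is None else rng
    theta = rng.uniform(0.0, p)                    # one dropout rate per point set
    keep = rng.random(points.shape[0]) >= theta    # each point dropped with probability theta
    return points[keep]

rng = np.random.default_rng(0)
cloud = rng.random((1024, 3))
augmented = random_input_dropout(cloud, p=0.95, rng=rng)   # training only
```

At test time the function is simply not called, so all available points are kept.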

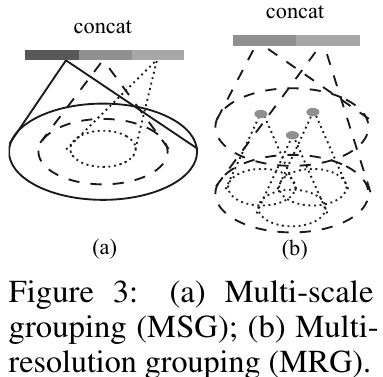

2.4.2. Solutions

Each abstraction level extracts local patterns at multiple scales and combines them intelligently according to local point density.

- Multi-scale Grouping (MSG)

- Features at different scales (each processed by a PointNet) are concatenated to form a multi-scale feature

- Drawbacks. computationally expensive (the number of centroid points is quite large at the lowest level)

- Multi-resolution Grouping (MRG)

- Left vector (a). summarizes the subregion features from level L-1 using the set abstraction level (sampling-grouping-PointNet)

- Right vector (b). obtained by directly processing all raw points in the local region with a single PointNet

- Low densities. (a) may be less reliable than (b)

The subregions used to compute (a) contain even sparser points and suffer more from sampling deficiency.

- High densities. (a) is better

(a) provides finer details, since it can inspect at higher resolutions recursively in lower levels.
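To make the multi-scale grouping (MSG) idea concrete, the sketch below computes one region feature per radius and concatenates them for each centroid. The helper names `region_feature` and `msg_features`, the radii, and the one-layer random "PointNets" are all hypothetical. MRG is not shown.

```python
import numpy as np

def region_feature(points, centroid, radius, weight):
    """Max-pooled feature of the points within `radius` of `centroid`.

    Uses a one-layer shared MLP (a stand-in for a per-scale PointNet).
    """
    mask = np.sum((points - centroid) ** 2, axis=1) <= radius ** 2
    local = points[mask] - centroid            # local frame relative to the centroid
    return np.maximum(local @ weight, 0.0).max(axis=0)

def msg_features(points, centroids, radii, weights):
    """Concatenate the per-scale region features for each centroid."""
    rows = []
    for c in centroids:
        per_scale = [region_feature(points, c, r, w) for r, w in zip(radii, weights)]
        rows.append(np.concatenate(per_scale))
    return np.stack(rows)

rng = np.random.default_rng(0)
points = rng.random((64, 3))
centroids = points[:4]                         # assumed to be drawn from the point set
radii = [0.2, 0.4]                             # hypothetical scales
weights = [rng.normal(size=(3, 8)), rng.normal(size=(3, 16))]
multi_scale = msg_features(points, centroids, radii, weights)   # shape (4, 8 + 16)
```

The cost noted under Drawbacks shows up here as one grouping and one PointNet pass per radius for every centroid.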

2.5. Point Feature Propagation for Segmentation

The set abstraction layers subsample the original point set, but the segmentation task needs point features for all of the original points.

- Use a hierarchical propagation strategy with distance-based interpolation (inverse-distance-weighted average over the k nearest neighbours)
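One interpolation step of the propagation can be sketched as follows. The function name `interpolate_features` is hypothetical, and `eps` is added only to avoid division by zero. The defaults `k=3` and `power=2` are believed to match the paper's defaults but are treated as assumptions here. Any further per-point processing after the interpolation is not shown.

```python
import numpy as np

def interpolate_features(dense_xyz, sparse_xyz, sparse_feats, k=3, power=2, eps=1e-8):
    """Inverse-distance-weighted average of the k nearest sparse features.

    dense_xyz: (N, d) points that need features.
    sparse_xyz: (N', d) subsampled points; sparse_feats: (N', C) their features.
    Returns (N, C) interpolated features.
    """
    diff = dense_xyz[:, None, :] - sparse_xyz[None, :, :]    # (N, N', d)
    dist = np.sqrt(np.sum(diff ** 2, axis=-1))               # (N, N')
    nn_idx = np.argsort(dist, axis=1)[:, :k]                 # (N, k) nearest sparse points
    nn_dist = np.take_along_axis(dist, nn_idx, axis=1)       # (N, k)
    w = 1.0 / (nn_dist ** power + eps)                       # closer points weigh more
    w = w / w.sum(axis=1, keepdims=True)                     # normalize weights per dense point
    return np.sum(w[:, :, None] * sparse_feats[nn_idx], axis=1)

sparse_xyz = np.array([[0.0, 0.0, 0.0], [1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]])
sparse_feats = np.array([[1.0], [2.0], [3.0], [4.0]])
dense_xyz = np.array([[0.0, 0.0, 0.0], [0.3, 0.3, 0.3]])
dense_feats = interpolate_features(dense_xyz, sparse_xyz, sparse_feats)   # shape (2, 1)
```

A dense point that coincides with a sparse point receives almost exactly that point's feature, because its weight dominates the average.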

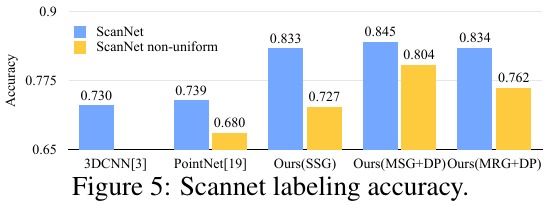

3. Experiments

3.1. Classification

Comparison

Robust to Sampling Density Variation

3.2. Segmentation

- Comparison