Keyword [Universal Adversarial Perturbations]

Poursaeed O, Katsman I, Gao B, et al. Generative adversarial perturbations[C]//Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 2018: 4422-4431.

1. Overview

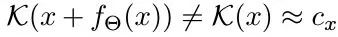

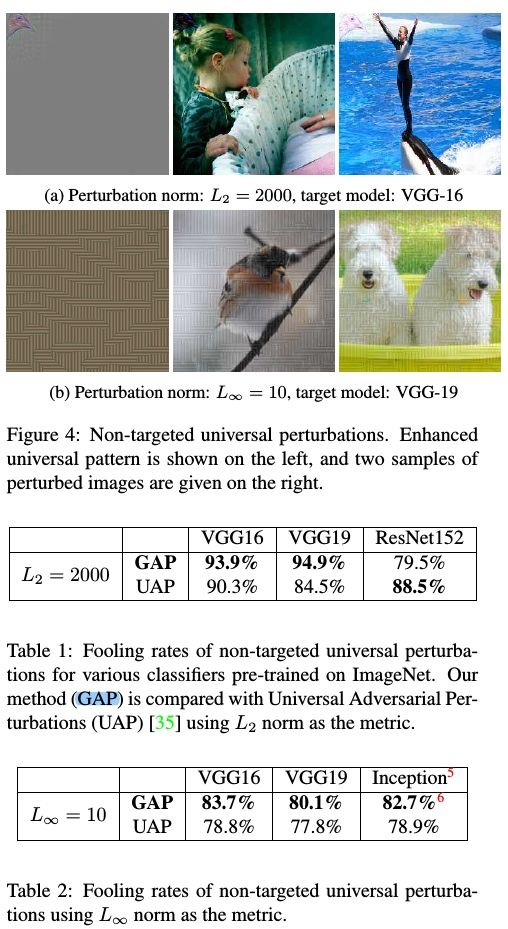

This paper proposes generative models for creating adversarial examples. The proposed approach:

- can produce image-agnostic and image-dependent perturbations for both targeted and untargeted attacks

- shows that similar architectures can achieve impressive results in fooling both classification and semantic segmentation models

- is faster than iterative methods at inference time

1.1. Type of perturbation

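- Universal: a single fixed perturbation that is applied to every image

- Image-dependent: the perturbation varies from image to image

- Targeted: the attack aims to make the model predict a chosen target class

- Untargeted: the attack aims to cause any incorrect prediction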
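
The four categories above can be illustrated with a small NumPy sketch. It is not taken from the paper: the array shapes, the `fooling_rate` helper, and the random perturbations are hypothetical stand-ins, used only to show how a universal perturbation broadcasts over a batch and how success is counted in the targeted and untargeted settings.

```python
import numpy as np

def fooling_rate(preds, reference, target=None):
    """Fraction of successful attacks.

    Untargeted (target=None): success if the prediction differs from the
    reference label (ground truth or the prediction on the clean image).
    Targeted: success if the prediction equals the chosen target class.
    """
    preds, reference = np.asarray(preds), np.asarray(reference)
    if target is None:
        return float(np.mean(preds != reference))
    return float(np.mean(preds == target))

rng = np.random.default_rng(0)
images = rng.random((4, 8, 8, 3))                      # toy batch in [0, 1]

# Universal: one perturbation, broadcast over the whole batch.
universal = 0.03 * rng.standard_normal((8, 8, 3))
adv_universal = np.clip(images + universal, 0.0, 1.0)

# Image-dependent: one perturbation per image.
per_image = 0.03 * rng.standard_normal((4, 8, 8, 3))
adv_dependent = np.clip(images + per_image, 0.0, 1.0)

# Made-up predictions, only to show the two success criteria.
preds, reference = [0, 2, 3, 2], [0, 1, 1, 3]
untargeted_rate = fooling_rate(preds, reference)            # 3 of 4 labels changed
targeted_rate = fooling_rate(preds, reference, target=2)    # 2 of 4 hit class 2
```
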

1.2. Contribution

- a unifying framework for creating universal and image-dependent perturbations

- state-of-the-art performance in universal perturbations

- the first to present effective targeted universal perturbations

- faster than iterative and optimization-based methods, on the order of milliseconds

1.3. Related Work

1.3.1. Universal Perturbations

- iterate over samples in a target set, aggregate the image-dependent perturbations, and normalize the result to build a universal perturbation

- add image-dependent perturbations and clip the result
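
The aggregate-and-normalize idea in the first bullet can be sketched on a toy problem. The code below is a simplified illustration, not the method of any specific paper: it assumes an affine multi-class classifier (`W`, `b` are random placeholders), uses the closed-form minimal step to the nearest decision boundary of such a classifier, and treats "normalize" as projection onto an L2 ball of radius `xi`. All function names are hypothetical.

```python
import numpy as np

def project_l2(v, xi):
    """Project v onto the L2 ball of radius xi."""
    norm = np.linalg.norm(v)
    return v if norm <= xi else v * (xi / norm)

def minimal_step(x, W, b, c):
    """Smallest L2 step that moves x onto the nearest decision boundary
    of the affine classifier argmax(W @ x + b), starting from class c."""
    scores = W @ x + b
    dW, df = W - W[c], scores - scores[c]
    norms = np.linalg.norm(dW, axis=1)
    norms[c] = 1.0                     # placeholder, avoids 0/0 for class c
    dist = np.abs(df) / norms
    dist[c] = np.inf                   # never select the current class
    k = int(np.argmin(dist))
    return (np.abs(df[k]) / norms[k] ** 2) * dW[k]

def universal_perturbation(X, W, b, xi, passes=3, overshoot=0.02):
    """Accumulate per-sample steps into one perturbation v with ||v||_2 <= xi."""
    v = np.zeros(X.shape[1])
    clean = np.argmax(X @ W.T + b, axis=1)
    for _ in range(passes):
        for x, c in zip(X, clean):
            if np.argmax(W @ (x + v) + b) == c:      # v does not fool x yet
                r = minimal_step(x + v, W, b, c)
                v = project_l2(v + (1 + overshoot) * r, xi)
    return v, clean

rng = np.random.default_rng(0)
X = rng.normal(size=(100, 10))                       # toy "images"
W, b = rng.normal(size=(3, 10)), rng.normal(size=3)  # toy classifier
v, clean = universal_perturbation(X, W, b, xi=2.0)
fooled = np.mean(np.argmax((X + v) @ W.T + b, axis=1) != clean)
```

Every sample requires its own inner step on each pass. That per-sample cost is what a generator that produces the perturbation in a single forward pass avoids.
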

1.3.2. Image-dependent Perturbation

- optimization-based

- FGSM

- Iterative Least-Likely Class

- adversarial examples are sensitive to the angle and distance from which the image is viewed
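
As a concrete instance of the image-dependent methods listed above, here is a minimal sketch of FGSM on a toy logistic-regression model. The model, its weights `w` and `b`, the input `x`, and the step size `eps` are illustrative assumptions. Only the update rule, one step of size `eps` along the sign of the input gradient, is the standard FGSM step.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def bce_loss(x, y, w, b):
    """Binary cross-entropy of a logistic-regression model on one input."""
    p = sigmoid(x @ w + b)
    return -(y * np.log(p) + (1 - y) * np.log(1 - p))

def fgsm(x, y, w, b, eps):
    """One signed-gradient step that increases the loss, clipped to [0, 1]."""
    grad_x = (sigmoid(x @ w + b) - y) * w    # d(loss)/dx for this model
    return np.clip(x + eps * np.sign(grad_x), 0.0, 1.0)

rng = np.random.default_rng(0)
w, b = rng.normal(size=20), 0.0              # toy model parameters
x, y = rng.random(20), 1.0                   # toy input in [0, 1] and its label

x_adv = fgsm(x, y, w, b, eps=0.05)
loss_clean = bce_loss(x, y, w, b)
loss_adv = bce_loss(x_adv, y, w, b)
```

Here the perturbation needs a fresh gradient for every input, whereas a trained generator only needs a forward pass at inference time.
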

2. Generative Adversarial Perturbation

2.1. Universal Perturbation

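A fixed pattern sampled from a uniform distribution U is fed to the generator, and the generator output is scaled to have a fixed norm.

The generator is trained with the fooling loss only; combining the fooling loss with a discriminative loss leads to sub-optimal results.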
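
The forward pass described above can be sketched as follows. This is a toy under stated assumptions, not the paper's implementation: `toy_generator` is a hypothetical one-layer stand-in for the generator network, the classifier is a random linear softmax model, the norm is taken to be L2, and plain cross-entropy toward a target class is used as a simple form of fooling loss (the paper's exact loss may differ). No training step is shown.

```python
import numpy as np

def toy_generator(z, theta):
    """Hypothetical stand-in for the generator: one linear layer plus tanh."""
    return np.tanh(theta @ z)

def scale_to_norm(delta, eps):
    """Rescale delta so that its L2 norm equals eps."""
    return delta * (eps / np.linalg.norm(delta))

def softmax(logits):
    e = np.exp(logits - logits.max(axis=-1, keepdims=True))
    return e / e.sum(axis=-1, keepdims=True)

def targeted_fooling_loss(probs, target):
    """Cross-entropy toward the target class; lower means more fooled."""
    return float(-np.log(probs[:, target] + 1e-12).mean())

rng = np.random.default_rng(0)
n_pixels, n_classes = 64, 5
z = rng.random(n_pixels)                        # fixed pattern, sampled once
theta = rng.normal(size=(n_pixels, n_pixels))   # generator weights (untrained)
W = rng.normal(size=(n_classes, n_pixels))      # toy target classifier

images = rng.random((8, n_pixels))              # toy batch in [0, 1]
delta = scale_to_norm(toy_generator(z, theta), eps=2.0)
adv = np.clip(images + delta, 0.0, 1.0)         # same delta for every image
loss = targeted_fooling_loss(softmax(adv @ W.T), target=3)
```

In training, `loss` would be minimized with respect to `theta` only, while the target classifier `W` stays fixed.
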

2.2. Image-dependent Perturbation

- the generator outputs a perturbation rather than the adversarial example itself, which gives better control over the perturbation magnitude
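
A short sketch of why generating the perturbation gives this control: whatever the generator outputs, the perturbation can be rescaled to a chosen bound before it is added to the image. The generator here is a hypothetical one-layer stand-in, and the L-infinity bound `eps = 10 / 255` and the `min(1, eps / peak)` scaling rule are illustrative assumptions.

```python
import numpy as np

def toy_image_generator(x, theta):
    """Hypothetical stand-in for an image-to-image generator network."""
    return np.tanh(x @ theta)

def bound_linf(delta, eps):
    """Shrink each row of delta so its largest absolute entry is at most eps."""
    peak = np.abs(delta).max(axis=1, keepdims=True)
    return delta * np.minimum(1.0, eps / np.maximum(peak, 1e-12))

rng = np.random.default_rng(0)
images = rng.random((4, 16))                 # toy batch of flattened images
theta = rng.normal(size=(16, 16))            # generator weights (untrained)
eps = 10 / 255

# Each image gets its own perturbation, and every one respects the bound.
delta = bound_linf(toy_image_generator(images, theta), eps)
adv = np.clip(images + delta, 0.0, 1.0)
```

If the generator produced `adv` directly, nothing in the architecture would guarantee that `adv - images` stays within `eps`.
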

2.3. Fooling Multiple Networks

3. Experiments

- $L_\infty$ norm: the perturbations make use of the maximum permissible magnitude at each pixel