Keyword [Darts]

Liu H, Simonyan K, Yang Y. Darts: Differentiable architecture search[J]. arXiv preprint arXiv:1806.09055, 2018.

1. Overview

1.1. Motivation

1) existing methods are based on a discrete and non-differentiable search space

2) existing methods apply evolution, RL, MCTS, SMBO or Bayesian optimization

In this paper, it proposes DARTS

1) using gradient descent

2) continuous relaxation

3) remarkable efficiency improvement

1.2. Dataset

1) CIRFAR-10

2) ImageNet

3) Penn Treebank

4) WikiText-2

2. Differentiable Architecture Search

2.1. Cell

1) Directed acyclic graph with an ordered sequence of N nodes $x^{(i)}$, which means feature maps

2) Directed edge $(i, j)$. $o^{(i, j)}$

3) Edge type set $O$: ‘none’, ‘max_pool_3x3’, ‘avg_pool_3x3’, ‘skip_connect’, ‘sep_conv_3x3’, ‘sep_conv_5x5’, ‘dil_conv_3x3’, ‘dil_conv_5x5’

4) Mixed Operation. aggregate all edges of each node by learned weights $α^{i,j}$

5) At the end of search, replace mixed operation with most likely operation

2.2. Network

1) Network based on Cells. $Input_k = (Input_{k-1}, Input_{k-2})$

2) Parameter $step$ in Cell.

Input: $(s1, s0) = (Input_{k-1}, Input_{k-2})$,

when $step=0$, $(s0, s1)→s2$ (2 edges)

when $step=1$, $(s0, s1, s2)→s3$ (3 edges), select top-2 2dges when trained

when $step=2$, $(s0, s1, s2, s3)→s4$ (4 edges), select top-2 2dges when trained

Output: $Concat(s_k, s_{k-1}, …, s_{k-N})$

3) Code Link

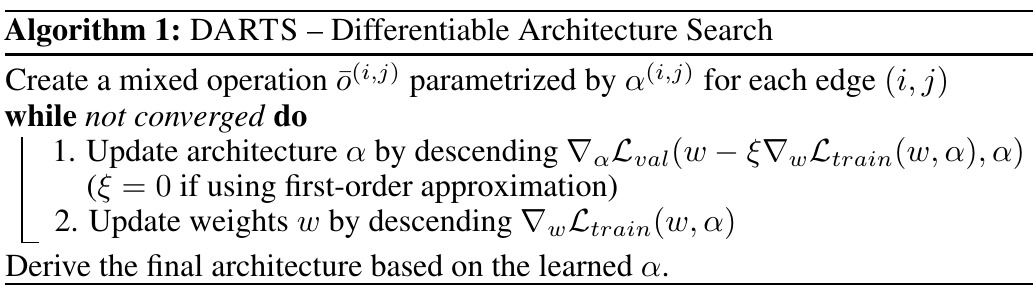

2.3 Optimization

1) jointly learn $α$ (validation set, Adam) and $w$ (training set, momentum SGD)

2) Approximate Architecture Gradient (hessian-vector product)

$ξ$. learning rate

($\theta$ is a copy from $w$)

update Copy Network $\theta$ → update $α$ → update Network $w$

(a) $\theta^* = \theta - η(momentum + d\theta + WeightDecay \times \theta)$ based on $(input_{train}, target_{train})$, $momentum$ from momentum SGD of network parameter $\theta$

(b) Based on $\theta^*$ and $(valid{train}, valid{train})$, $dα$ and $vector$ (grad of $\theta$)

(c) Get implicity grad based on $vector$ and $(input_{train}, target_{train})$

(d) $dα$ -= implicity grad

(e) update Network $w$ based on $(input_{train}, target_{train})$

3. Experiments

3.1. Details

- During searching, all BN’s affine is False