Keyword [EfficientNet]

Tan M, Le Q V. EfficientNet: Rethinking Model Scaling for Convolutional Neural Networks[C]. International Conference on Machine Learning, 2019: 6105-6114.

1. Overview

1.1. Motivation

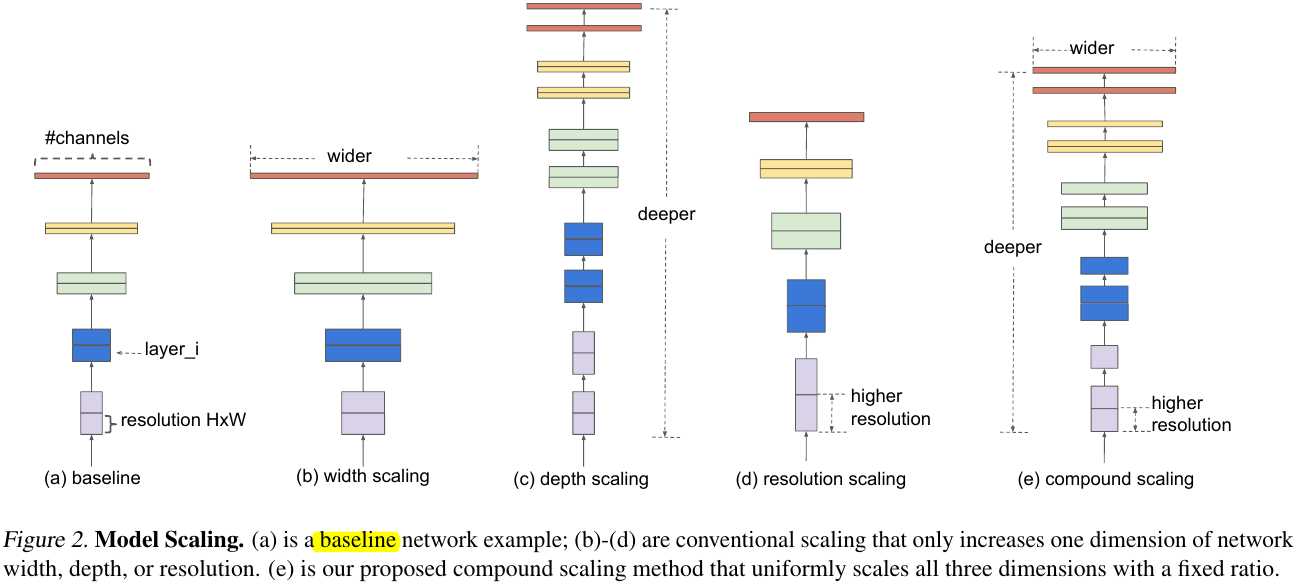

Existing methods scale only one dimension (depth, width, or resolution) when designing a network.

This paper proposes EfficientNet.

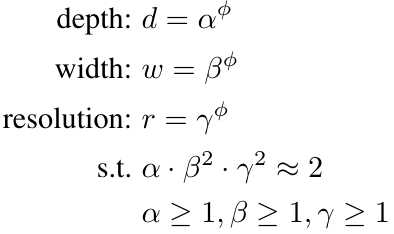

1) A compound scaling method that scales depth $d$, width $w$, and resolution $r$ jointly.

2) Search for a baseline EfficientNet-B0 → fix $\phi$ and run a small grid search for the scaling constants of $d, w, r$ → fix those constants and scale $\phi$ to get EfficientNet-B1 to B7.

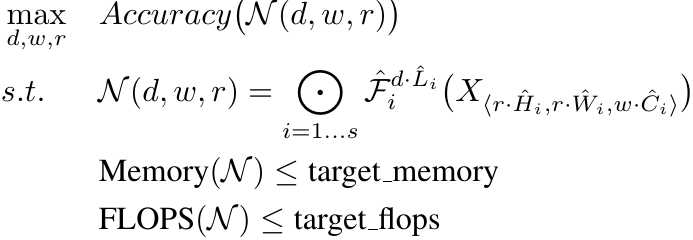

2. Compound Model Scaling

1) $N$. ConvNet

2) $i$. stage

3) $\hat{\mathcal{F}}_i^{d \cdot \hat{L}_i}$. Layer $\hat{\mathcal{F}}_i$ repeated $d \cdot \hat{L}_i$ times in stage $i$.

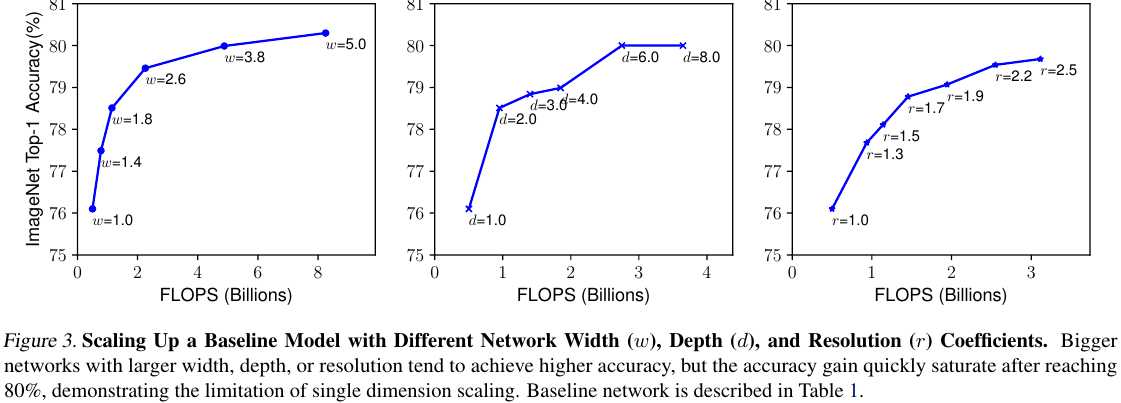

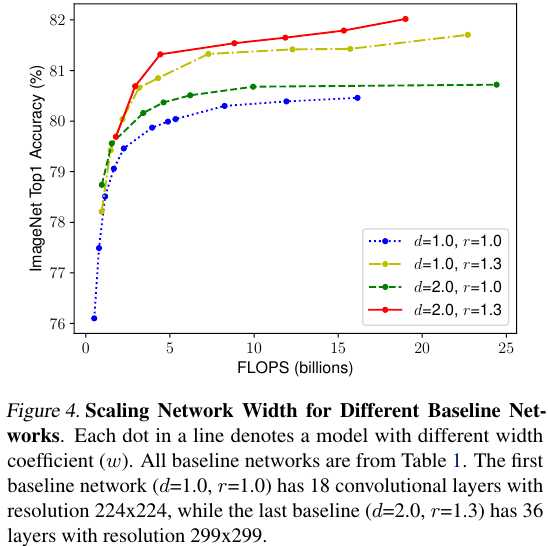

2.1. Observation

1) Scaling up any dimension of network width, depth, or resolution improves accuracy, but the accuracy gain diminishes for bigger models.

2) It is critical to balance all dimensions of network width, depth, and resolution during ConvNet scaling.

2.2. Compound Scaling Method

1) $\alpha, \beta, \gamma$ are constants, determined by small grid search.

2) $\phi$ is a user-specified coefficient that controls how many more resources are available for model scaling.
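The role of the two kinds of parameters can be sketched in a few lines of Python. This is a minimal illustration, assuming the paper's rule $d = \alpha^\phi$, $w = \beta^\phi$, $r = \gamma^\phi$ and that FLOPS grow roughly with $d \cdot w^2 \cdot r^2$; the function name `compound_scale` is hypothetical, and the default constants are the values reported in Section 3.2.

```python
def compound_scale(phi, alpha=1.2, beta=1.1, gamma=1.15):
    """Return (depth, width, resolution, flops) multipliers for a given phi.

    Assumes d = alpha**phi, w = beta**phi, r = gamma**phi, and that FLOPS
    scale approximately with d * w**2 * r**2.
    """
    depth = alpha ** phi
    width = beta ** phi
    resolution = gamma ** phi
    # With alpha * beta**2 * gamma**2 close to 2, this is close to 2**phi.
    flops = depth * width ** 2 * resolution ** 2
    return depth, width, resolution, flops


if __name__ == "__main__":
    for phi in range(4):
        d, w, r, f = compound_scale(phi)
        print(f"phi={phi}: depth x{d:.2f}, width x{w:.2f}, "
              f"resolution x{r:.2f}, FLOPS x{f:.2f}")
```

The multipliers are relative to the baseline network; turning them into concrete layer counts, channel counts, and input sizes requires rounding, which this sketch leaves out.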

3. EfficientNet Architecture

3.1. Search for Baseline

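1) Optimization goal: $ACC(m) \times [ \frac{FLOPS(m)}{T} ]^w$ with $w=-0.07$, where $m$ is a candidate model and $T$ is the target FLOPS. Here $w$ is a hyperparameter that trades accuracy against FLOPS, not the width coefficient.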

2) The search produces EfficientNet-B0.
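The optimization goal can be written as a small function to show how the exponent penalizes models that exceed the FLOPS target. This is only a sketch of the formula above, not the search procedure itself; the function name `search_reward` and the example numbers are hypothetical.

```python
def search_reward(acc, flops, target_flops, w=-0.07):
    """Compute ACC(m) * (FLOPS(m) / T) ** w for one candidate model.

    acc          -- accuracy of the candidate model
    flops        -- FLOPS of the candidate model
    target_flops -- target FLOPS T
    w            -- trade-off exponent (negative, so extra FLOPS lower the reward)
    """
    return acc * (flops / target_flops) ** w


if __name__ == "__main__":
    # Illustrative values only: the same accuracy at 1x, 2x, and 0.5x the target.
    for ratio in (1.0, 2.0, 0.5):
        print(ratio, search_reward(0.8, ratio * 400e6, 400e6))
```

Because $w$ is negative, a model that uses more FLOPS than the target is scored below its raw accuracy, and a model under the target is scored slightly above it.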

3.2. Adjust Parameters

1) Step 1. Fix $\phi=1$ and run a small grid search, which gives $\alpha=1.2, \beta=1.1, \gamma=1.15$ under the constraint $\alpha \cdot \beta^2 \cdot \gamma^2 \approx 2$.

2) Step 2. Fix $\alpha, \beta, \gamma$ and scale up the baseline with different values of $\phi$.
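The constraint in Step 1 can be illustrated by enumerating the grid points that satisfy it. This sketch covers only the feasibility filter: in the actual search each candidate would also be trained and evaluated, which is omitted here. The function name `feasible_candidates`, the grid step, the grid range, and the tolerance are all assumptions chosen for illustration.

```python
import itertools


def feasible_candidates(step=0.05, lo=1.0, hi=2.0, target=2.0, tol=0.1):
    """List (alpha, beta, gamma, cost) with alpha * beta**2 * gamma**2 near target.

    step, lo, hi, and tol are illustrative choices, not values from the paper.
    """
    n = int(round((hi - lo) / step)) + 1
    grid = [round(lo + i * step, 2) for i in range(n)]
    candidates = []
    for alpha, beta, gamma in itertools.product(grid, repeat=3):
        cost = alpha * beta ** 2 * gamma ** 2
        if abs(cost - target) <= tol:
            candidates.append((alpha, beta, gamma, cost))
    return candidates


if __name__ == "__main__":
    found = feasible_candidates()
    print(len(found), "feasible grid points")
    # The reported constants give a cost of about 1.92, within the tolerance.
    print([c for c in found if c[:3] == (1.2, 1.1, 1.15)])
```

Among the feasible points, the paper's reported choice $\alpha=1.2, \beta=1.1, \gamma=1.15$ gives $\alpha \cdot \beta^2 \cdot \gamma^2 \approx 1.92$, so each unit increase in $\phi$ roughly doubles FLOPS.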

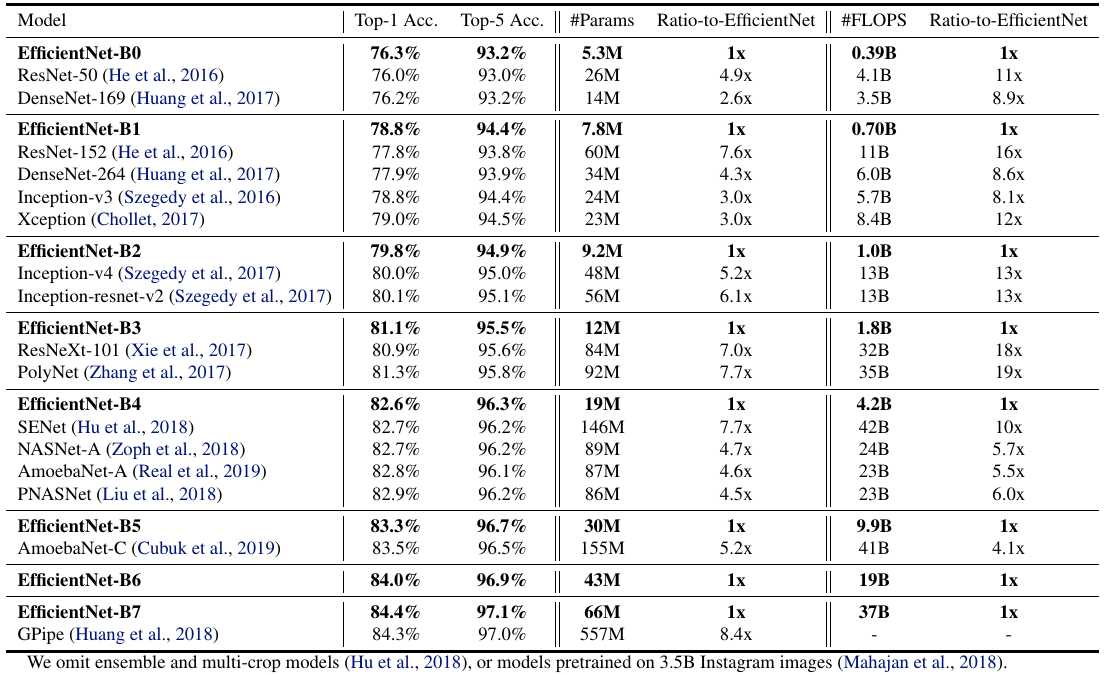

4. Experiments