Keyword [Compact Generalized Non-local]

Yue K, Sun M, Yuan Y, et al. Compact Generalized Non-local Network[C]. Advances in Neural Information Processing Systems (NeurIPS), 2018: 6510-6519.

1. Overview

1.1 Motivation

1) The Non-local (NL) block models correlations between positions while ignoring correlations across channels.

2) Different categories often correspond to different channels.

To address this, the paper proposes the Compact Generalized Non-local (CGNL) block:

1) Generalize the NL block and take the correlations between the positions of any two channels into account.

2) Compact the representation with a Taylor expansion of the kernel function.

3) Group feature channels before NL operation.

2. Review

2.1. Non-local Operation

1) With the dot-product kernel, $Y=f(\theta (X), \phi (X)) g(X) = XW_\theta W_\phi^TX^TXW_g$.

2) $\theta(X) = XW_\theta \in R^{N \times C}, \phi(X)=XW_\phi \in R^{N \times C}, g(X)=XW_g \in R^{N \times C}$.
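As a minimal NumPy sketch of the formula above (toy sizes and random weights are assumptions for illustration, not the paper's settings), the dot-product non-local operation can be computed either through the $N \times N$ position affinity or, by associativity, through the smaller $C \times C$ product:

```python
import numpy as np

rng = np.random.default_rng(0)
N, C = 6, 4                                  # N positions, C channels (toy sizes)
X = rng.standard_normal((N, C))
W_theta, W_phi, W_g = (rng.standard_normal((C, C)) for _ in range(3))

# theta(X), phi(X), g(X): each is N x C
theta, phi, g = X @ W_theta, X @ W_phi, X @ W_g

# Dot-product kernel: f(theta, phi) = theta phi^T, an N x N position affinity
affinity = theta @ phi.T
Y = affinity @ g                             # N x C

# Same result by associativity, without forming the N x N matrix
Y_fast = theta @ (phi.T @ g)
```

Both orderings give the same `Y`; only the size of the intermediate matrix differs.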

2.2. Bilinear Pooling

1) $Z = X^TX \in R^{C \times C}$.

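2) This can be seen as a non-local style product with $\theta(X) = X^T \in R^{C \times N}, \phi(X) = X^T \in R^{C \times N}$, so the affinity is taken between channels instead of positions.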
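A short NumPy sketch of bilinear pooling, assuming a toy feature map `X` with $N$ positions and $C$ channels, makes the channel-wise nature of $Z$ explicit:

```python
import numpy as np

rng = np.random.default_rng(0)
N, C = 6, 4                      # toy sizes
X = rng.standard_normal((N, C))

# Z[a, b] sums X[n, a] * X[n, b] over all positions n,
# i.e. a correlation between channel a and channel b.
Z = X.T @ X                      # C x C
```

Position information is summed out here, which is the opposite trade-off to the NL block.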

3. Generalized Non-local Operation

3.1. Basic Modification

1) Merge channel into position

$\theta(X) = vec(XW_\theta) \in R^{NC}, \phi(X)=vec(XW_\phi) \in R^{NC}, g(X) = vec(XW_g) \in R^{NC}$

2) $vec(Y)=f(vec(XW_\theta), vec(XW_\phi)) vec(XW_g)$.

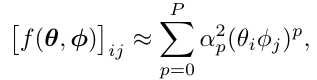
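The generalized operation can be sketched in NumPy as follows. The dot-product kernel, the row-major flattening used for $vec(\cdot)$, and the toy sizes are assumptions made for illustration:

```python
import numpy as np

rng = np.random.default_rng(0)
N, C = 6, 4
X = rng.standard_normal((N, C))
W_theta, W_phi, W_g = (rng.standard_normal((C, C)) for _ in range(3))

# vec(.) flattens an N x C map into a vector of length NC
theta = (X @ W_theta).reshape(-1)
phi = (X @ W_phi).reshape(-1)
g = (X @ W_g).reshape(-1)

# Affinity between every (position, channel) pair: NC x NC
affinity = np.outer(theta, phi)
vec_Y = affinity @ g                 # length NC
Y = vec_Y.reshape(N, C)              # back to an N x C map
```

The affinity now has $(NC)^2$ entries instead of $N^2$, which is what motivates a compact representation.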

3.2. Compact Representation

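1) $vec(Y) \approx \Theta \Phi^T g$, where $\Theta, \Phi \in R^{NC \times (P+1)}$ stack the Taylor-expansion terms of $\theta$ and $\phi$ up to order $P$.

2) $\Theta \Phi^T \in R^{NC \times NC}, P \ll NC$.

3) $z = \Phi^T g \in R^{P+1}$, so $vec(Y) \approx \Theta z$ can be computed without forming the $NC \times NC$ matrix.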

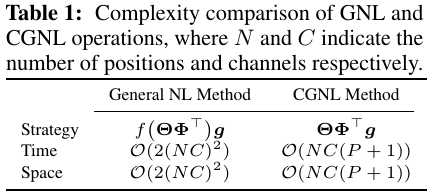
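To illustrate the idea, here is a hedged NumPy sketch that uses an exponential kernel $f(a, b) = e^{ab}$ as a stand-in (an assumption chosen because its Taylor series $\sum_p (ab)^p / p!$ is simple; the paper's kernels and coefficients may differ). The helper `taylor_features` is hypothetical: it stacks $\alpha_p v^p$ with $\alpha_p = 1/\sqrt{p!}$ as columns, so the $NC \times NC$ kernel matrix is approximated by a product of two thin $NC \times (P+1)$ matrices and $z = \Phi^T g$ can be computed first.

```python
import math
import numpy as np

def taylor_features(v, order):
    """Stack alpha_p * v**p for p = 0..order as columns: shape (len(v), order + 1)."""
    alphas = np.array([1.0 / math.sqrt(math.factorial(p)) for p in range(order + 1)])
    powers = v[:, None] ** np.arange(order + 1)[None, :]
    return powers * alphas

rng = np.random.default_rng(0)
NC, P = 24, 3                              # toy length of vec(.) and Taylor order
theta = rng.uniform(-0.5, 0.5, NC)
phi = rng.uniform(-0.5, 0.5, NC)
g = rng.standard_normal(NC)

# Exact result: builds the full NC x NC kernel matrix
exact = np.exp(np.outer(theta, phi)) @ g

# Compact result: never forms the NC x NC matrix
Theta = taylor_features(theta, P)          # NC x (P + 1)
Phi = taylor_features(phi, P)              # NC x (P + 1)
z = Phi.T @ g                              # length P + 1
approx = Theta @ z                         # length NC

max_err = np.max(np.abs(exact - approx))
```

For small inputs the truncation error `max_err` stays small even at low order, while memory drops from $(NC)^2$ to $NC \cdot (P+1)$ entries.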

3.3. Complexity Analysis

3.4. Architecture

1) $Z = concat(BN(Y' W_z)) + X$.
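The block structure can be sketched in NumPy under several assumptions that are not taken from the paper: a dot-product kernel (so $z$ is a single scalar per group), a per-group $W_z$, projections applied before the channel split, and a plain per-channel standardization (`normalize`) standing in for BN without learned scale or shift.

```python
import numpy as np

def cgnl_dot(theta, phi, g):
    """Compact generalized NL op on one group, dot-product kernel (assumed)."""
    z = phi.reshape(-1) @ g.reshape(-1)    # scalar: Phi^T g with a single feature column
    return theta * z                       # same shape as theta

def normalize(y, eps=1e-5):
    """Stand-in for BN: standardize each channel over positions."""
    return (y - y.mean(axis=0)) / np.sqrt(y.var(axis=0) + eps)

rng = np.random.default_rng(0)
N, C, G = 6, 8, 2                          # positions, channels, groups (toy sizes)
Cg = C // G                                # channels per group
X = rng.standard_normal((N, C))
W_theta, W_phi, W_g = (0.1 * rng.standard_normal((C, C)) for _ in range(3))
W_z = [0.1 * rng.standard_normal((Cg, Cg)) for _ in range(G)]

theta, phi, g = X @ W_theta, X @ W_phi, X @ W_g

outputs = []
for k in range(G):
    cols = slice(k * Cg, (k + 1) * Cg)     # channels belonging to group k
    y_k = cgnl_dot(theta[:, cols], phi[:, cols], g[:, cols])   # N x Cg
    outputs.append(normalize(y_k @ W_z[k]))

# Concatenate the groups along channels and add the residual connection
Z = np.concatenate(outputs, axis=1) + X
```

Grouping keeps each flattened vector at length $N \cdot C/G$, and the residual term lets the block be inserted into an existing network without changing its output shape.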

4. Experiments

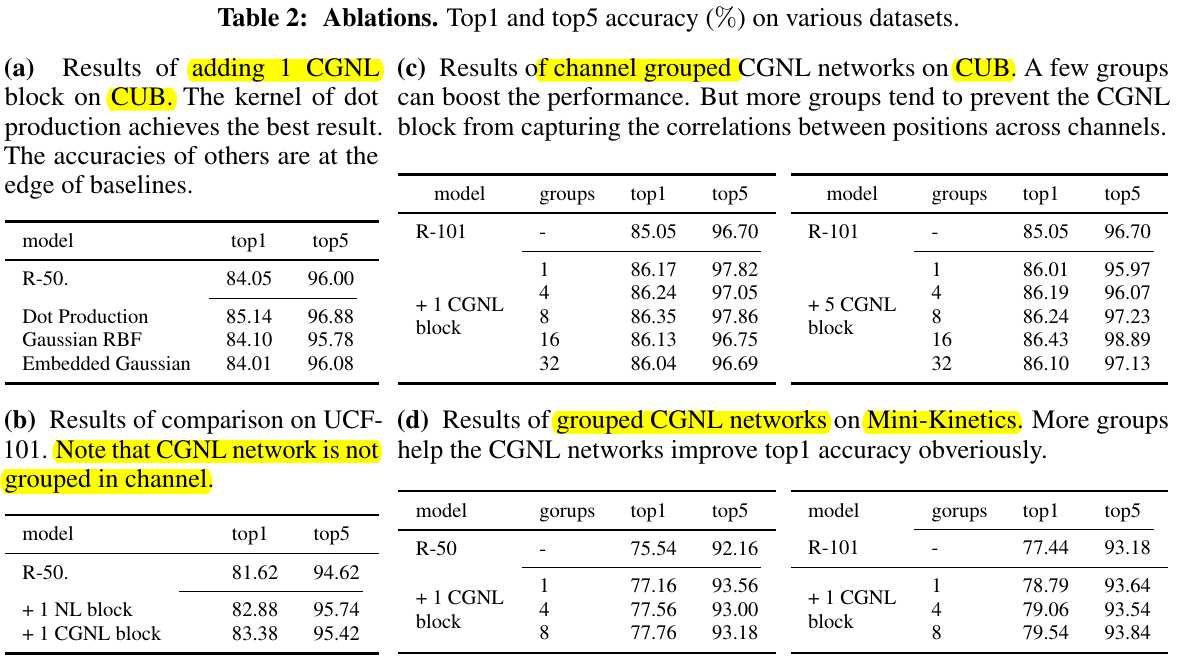

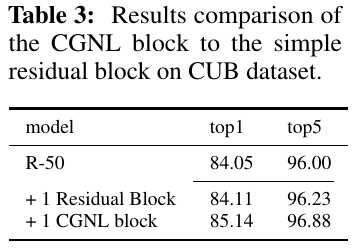

4.1. Ablation Study

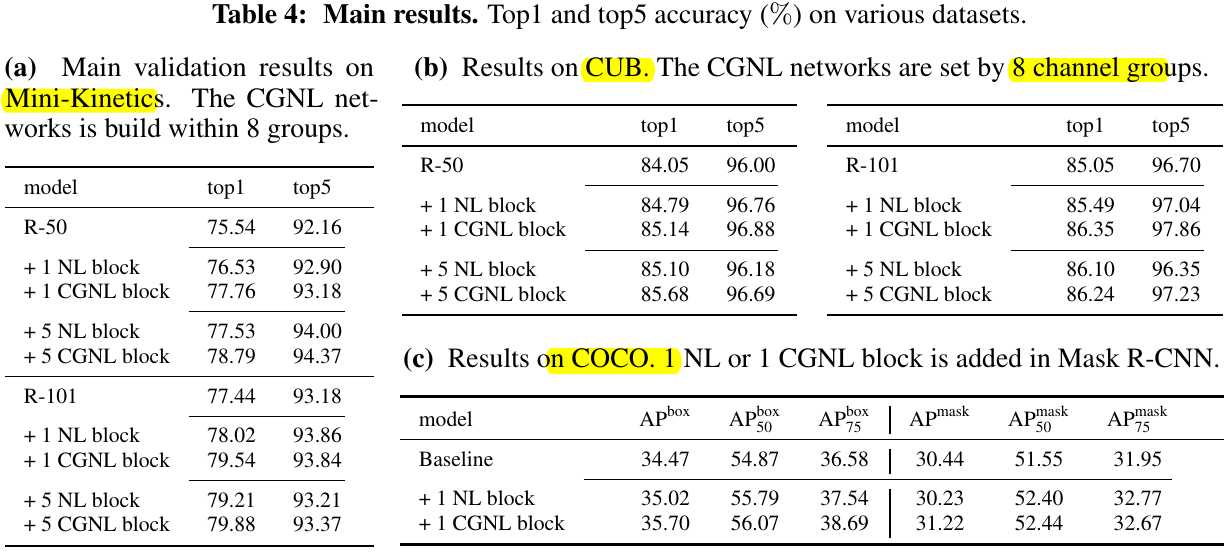

4.2. Comparison

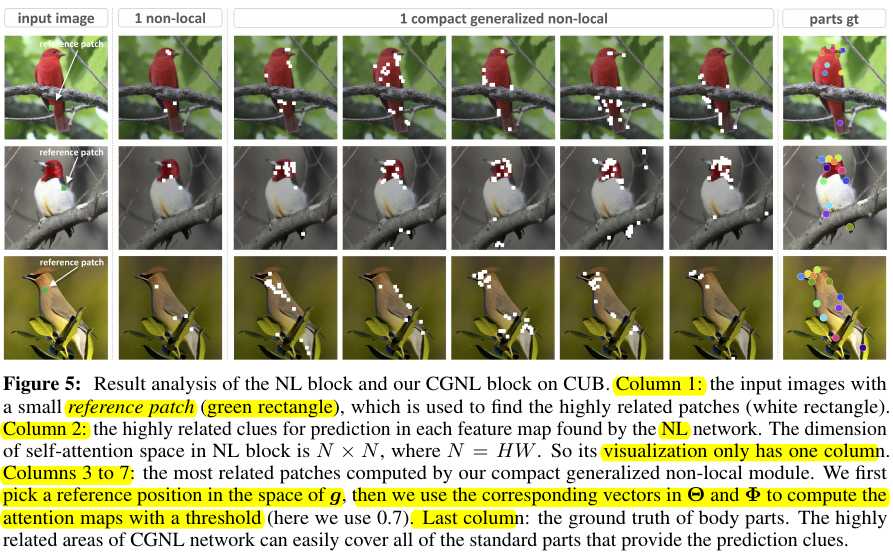

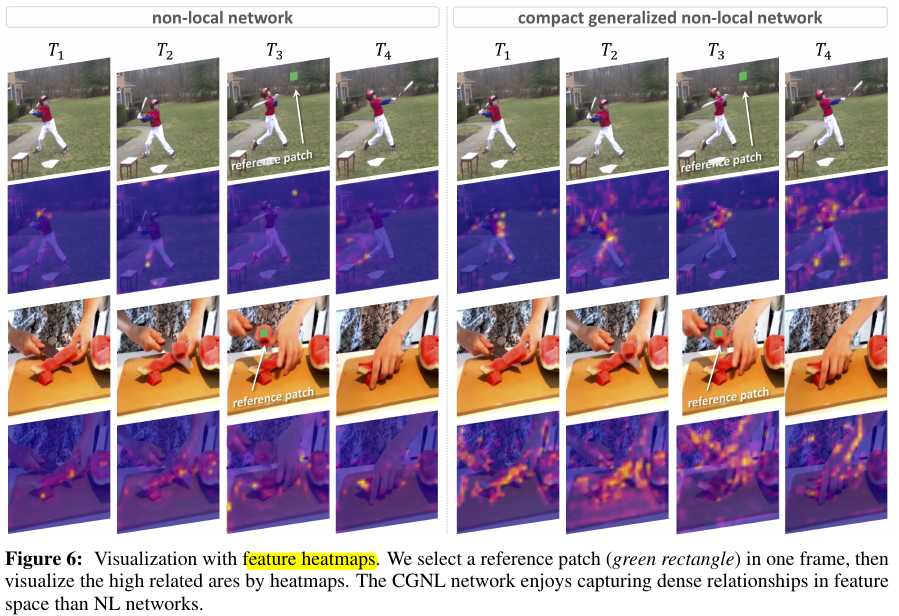

4.3. Visualization